#028 - A model rebellion

Two competing pieces of AI news landed within a few days of each other. What does this mean for the space?

You're reading Complex Machinery, a newsletter about risk, AI, and related topics.

More questions than answers

Details on the recently unveiled "Stargate" AI investment project are sparse. What we know thus far boils down to:

- Stargate represents $500B of money, spent over four years, destined for AI projects in America.

- This private investment includes at least $100M from SoftBank. You may remember SoftBank as having bankrolled gems such as Greensill Capital (NB: accused of fraud), WeWork (no fraud but still raised a few eyebrows), and Wirecard (accused of massive fraud, with a hint of a spy scandal for good measure).

- The first round – or possibly all of it? – will go to AI infrastructure projects like datacenters and the power plants to drive them.

This isn't nearly enough information for me to draw meaningful conclusions. But it's enough for me to raise questions:

How could all this Stargate money improve the state of AI for B2C and B2B buyers? GenAI is still in its hopeful stages, looking for practical use cases. Building new datacenters on which to run this not-yet-there and may-not-make-it AI feels like putting the cart before the horse. Or perhaps growing the casino rather than improving gamblers' odds.

Who benefits from all this AI infrastructure, then? The increase in capacity should entice more companies to try AI, and give them room to run the use cases they surface. And if their use cases don't pan out? Well, the infrastructure providers still get paid. It's sort of like running a stock exchange – you make money on every trade, winners and losers alike.

Is this the best use of capital? Is there another place to invest $500B that is less risky? Some mix of "more likely to pan out" and "lower cost" and "higher return for the world"?

("Educating people on what AI actually can and cannot do" seems like a good bet. But that's just me.)

I plan to revisit these questions as the deal solidifies.

Coming up short

There's a deeper issue with the $500B Stargate funding. It's easier to spot if you put on your trader goggles:

Making money on Wall Street involves capitalizing on price movements. You buy some under-valued shares and sell them when prices rise – the old "buy low, sell high" routine. You can also make money when prices fall; you simply reorder those steps to "sell high, buy low." This is known as shorting.

To short a stock is to express your belief that it is temporarily overpriced. Especially if you think it's overpriced because of hype or dishonesty. You make your money when everyone else comes around to your way of thinking and the share price tanks.

The trick with shorting is that it's not enough to figure out that something is overpriced before everyone else does. You have to spot the overpriced asset, then hold your short position till the prices fall. Anything that sustains the inflated prices, or pushes them higher, postpones your payout date. You're in for a painful ride if you need that money sooner.

This happened during the US mortgage crisis: plenty of people saw that the housing market was overheating, but they took up their short positions too early. House prices kept creeping up and mortgages kept getting hotter, so they had to bail. Only a handful of investors – famously, John Paulson and Michael Burry – timed it right and walked away with a windfall.
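To put rough numbers on that timing problem, here's a minimal sketch of a short position's profit and loss. Everything in it is hypothetical: the `short_pnl` function, the prices, the share count, and the flat 5% annual borrow fee are illustrative assumptions, not real market data. Actual shorts also involve margin requirements and fees that change over time, which this ignores.

```python
def short_pnl(entry_price, exit_price, shares, annual_borrow_rate, days_held):
    """Profit or loss on a short position, net of a simple borrow fee.

    Assumption: the borrow fee is a flat annual rate charged on the
    position's value at entry. Real-world fees and margin rules vary.
    """
    # "Sell high, buy low": you gain when the exit price is below the entry price.
    gross = (entry_price - exit_price) * shares
    # The longer you hold, the more you pay to keep the position open.
    borrow_cost = entry_price * shares * annual_borrow_rate * days_held / 365
    return gross - borrow_cost


# Hypothetical scenario: short 100 shares at $100 with a 5% annual borrow fee.
right_and_on_time = short_pnl(100, 60, 100, 0.05, 365)  # price falls within a year
right_but_late = short_pnl(100, 60, 100, 0.05, 730)     # same fall, a year later
forced_out_early = short_pnl(100, 130, 100, 0.05, 365)  # bail while prices still climb

print(round(right_and_on_time), round(right_but_late), round(forced_out_early))
```

The thesis is identical in all three cases. Only the timing differs: waiting an extra year eats into the payout, and having to close the position while prices are still rising turns a correct call into a loss.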

There's a similar game afoot in AI. The technology shows plenty of potential; but as most valuations are based on ~~wishful thinking~~ Extreme Optimism™, I wouldn't blame you for saying that the field is overheated.

When I look at this AI infrastructure deal, then, what I see is even more hot air pumped into AI. It's the equivalent of housing valuations going up, up, up after people had staked short positions on mortgages. If AI does turn out to be a bubble, the extra funding – having widened the distance between fundamental and perceived value – could make the collapse even more painful for investors.

But for now, if you are shorting Bullshit AI™, your position just got harder to hold.

A deep-seeking missile

Or, maybe not.

For the past two years we've been told that genAI is expensive, power-hungry, and only available to megacorps with massive datacenters. AI startup DeepSeek has demonstrated otherwise. Its "R1" model performs roughly on par with offerings from larger firms like OpenAI (parent of ChatGPT), at a fraction of the training cost and electricity demand. It's also open-source, so you can run it on your own hardware without paying DeepSeek a dime.

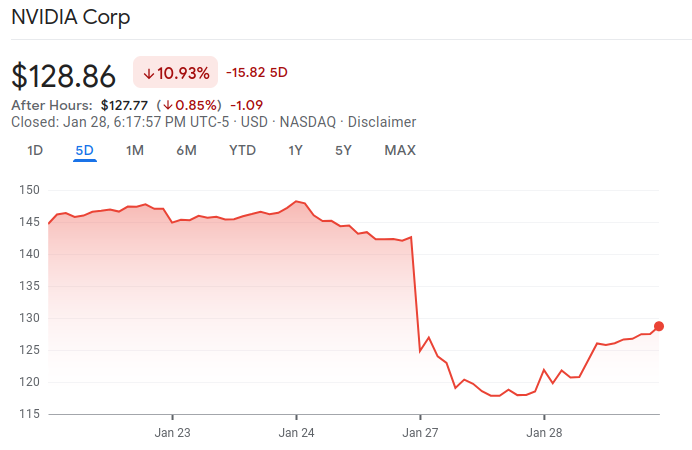

Does DeepSeek represent a threat to the incumbent AI giants? According to financial markets, yes. The news triggered a selloff of $1 trillion in AI-related share value. (Some of that has since recovered.)

If you were shorting AI and managed to cash out, you're feeling pretty good about yourself right now.

What does the DeepSeek news mean for everyone else?

Companies interested in using genAI face a much lower barrier to adoption. They can grab the model to run on their own hardware, which means they aren't paying OpenAI on a metered, per-token basis. (Think of a token as a fraction of a word – one or two syllables. You see how even modest AI work can quickly add up.) Some of them will slash their AI R&D budget by using DeepSeek to build proof-of-concept apps, then porting those ideas to OpenAI or a similar provider to run in production.

These companies still face model risk, reputation risk, and other concerns inherent to deploying AI-enabled apps. But taking the R&D budget risk off the table is a Big Deal™.
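To see how metered, per-token pricing adds up, here's a back-of-the-envelope sketch. The `metered_cost` function and every number in it (request volume, tokens per request, and especially the price per million tokens) are made-up assumptions for illustration, not any provider's actual rates.

```python
def metered_cost(requests_per_day, tokens_per_request, price_per_million_tokens, days):
    """Estimated spend under per-token billing.

    Assumption: one flat price covers all tokens. Real providers often
    charge different rates for input and output tokens.
    """
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical workload: 10,000 requests a day at 1,500 tokens each,
# billed at a made-up $10 per million tokens, over a 30-day month.
monthly_bill = metered_cost(10_000, 1_500, 10.0, 30)
print(f"${monthly_bill:,.0f} per month")
```

Under those assumptions, a fairly modest app burns through 450 million tokens a month. Running an open model on your own hardware swaps that recurring bill for hardware and electricity costs, which is the trade-off companies would need to price out for themselves.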

Individuals interested in using genAI, so long as they have the technical aptitude, are in the same boat as the companies. A few people have told me that they are running DeepSeek's R1 on their laptop.

Expect this to lead to more AI-powered startups as founders can experiment in-house for as-good-as-free.

(When I think of an open source model that can compete with commercial offerings, it's hard to not draw parallels to the late-1990s era of open source software. That led to massive growth of the tech sector, a flood of self-taught software developers, cloud computing, and the rise of AI. More on that in a future newsletter.)

Consumers will face even more AI Crammed Into Places They Don't Want. That's the double-edged sword of reducing genAI's barrier to entry. On the plus side, they'll now see small-batch, artisanal forms of unwanted AI thanks to the new startups. Yay?

Anyone who holds a long position on AI stocks has technically suffered a loss. But those are unrealized losses and therefore a step shy of funny money. Share prices may very well go back up! It's bargain season if you're bullish – perhaps time to buy the dip.

Then again, share prices reflect belief – specifically, belief in a company's or sector's future prospects – so if we frame this as "the American AI sector lost $1 trillion in belief in a single day," that's a rather damning indictment. And it stems from some hard questions:

- If all you need is a laptop to run powerful AI models, why do the incumbents need all that cash for datacenters and chips?

- If DeepSeek trained their model for pennies on the dollar, what the hell have OpenAI and friends been doing?

- Have investors been paying more for name recognition than tangible value?

That takes us to our final group:

Incumbent players in the AI space have just received a harsh reminder: Your money is not a moat. You are vulnerable. The timing of the DeepSeek news caught me by surprise, but the event has always been possible. And it can happen again.

The dirty secret of AI is that money does not guarantee success. It improves the chances of building better, faster AI models; but someone operating on a fraction of your budget can still compete.

Keep this in mind when you see American (and European, to a lesser extent) tech titans brag about their AI price tags. Especially when a leaner outfit outpaces them. The history books are littered with stories of tiny, ragtag forces going toe-to-toe with larger, better-funded armies. The smaller groups learn to be effective with less. And sometimes, the bigger army is just too large for its own good.

In other news …

- Facial recognition technology will be your new officemate. (Le Monde 🇫🇷)

- Peter Tchir asks whether the DeepSeek news of "cheap AI" represents a black swan event. (The Street)

- The impact of AI on the "human element" of work. (The Guardian)

- Google and Microsoft are cramming AI tools into their respective office suites – and adjusting prices accordingly. (TechCrunch, Bloomberg)

- An AI misinformation expert used ChatGPT to write their court filing for an AI case. The bot ~~hallucinated~~ completely made up some citations. (Reuters)

- Microsoft claims DeepSeek models are built on OpenAI's work. No idea whether it's true. But given all of the copyright infringement lawsuits OpenAI is fighting, this is a real pot/kettle situation. (Semafor)