#030 - The countdown to zero

What happens when AI's various costs dwindle?

You're reading Complex Machinery, a newsletter about risk, AI, and related topics. (You can also subscribe to get this newsletter in your inbox.)

Last time I promised more thoughts on the cost of AI going to zero. Consider this your four-act play on the subject.

I actually outlined most of this material months ago, but parked it because I kept running out of space in the newsletter. Then DeepSeek made the topic timely again by releasing its "R1" AI model as a free, open-source download. That explains the first three acts.

The fourth act was inspired by a sharp-eyed subscriber. They saw my note about "next time" and, as though they'd peeked at my outline, kicked off a conversation about what was on tap for today. They also brought up something I'd originally planned to skip. I took that as a sign it was indeed relevant. So I've included that topic as well.

(To that person: You know who you are. And thank you.)

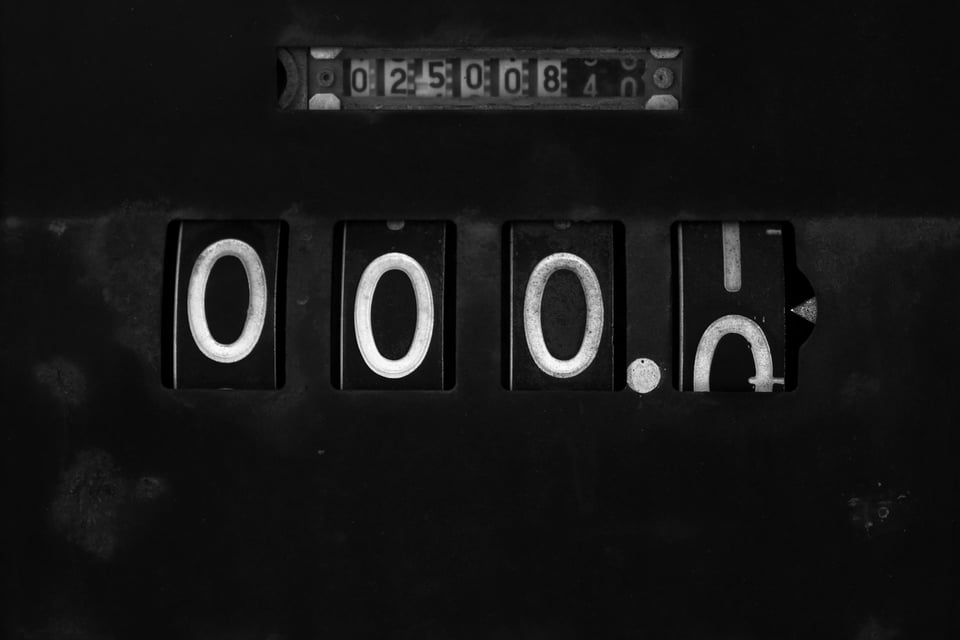

Act I: Seeing zero in the distance

Chris Anderson's 2009 book Free explored the shift from physical to digital goods. Or as he put it, the move from shipping atoms to shipping bits. Sixteen years on it seems a quaint read in our largely digital world, but there are some timeless ideas in there. One in particular has stuck with me:

Anticipate the Cheap

When the cost of the thing you're making falls this regularly, for this long, you can try pricing schemes that would seem otherwise insane. Rather than sell it for what it costs today, you can sell it for what it will cost tomorrow. The increased demand for this lower price will accelerate the curve, ensuring that the product will cost even less than expected when tomorrow comes. So you make more money.

I've stored a shorter yet wider-reaching version in my brain:

If the cost is pointing to zero, assume it's already zero and plan accordingly.
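To put rough numbers on Anderson's idea, here is a minimal sketch. It assumes the unit cost falls by a fixed fraction each period, which is a simplification of mine and not something the book specifies. The function names and the figures in the usage note are hypothetical.

```python
def cost_after(initial_cost: float, decline_rate: float, periods: int) -> float:
    """Unit cost after `periods`, assuming a constant fractional decline per period."""
    return initial_cost * (1 - decline_rate) ** periods


def periods_until_profitable(initial_cost: float, price: float, decline_rate: float) -> int:
    """Count the periods until the falling unit cost is at or below a price fixed today."""
    if not 0 < decline_rate < 1:
        raise ValueError("decline_rate must be strictly between 0 and 1")
    if price <= 0:
        raise ValueError("price must be positive; the cost only approaches zero")
    cost = initial_cost
    periods = 0
    # Step the cost down one period at a time until it no longer exceeds the price.
    while cost > price:
        cost *= 1 - decline_rate
        periods += 1
    return periods
```

With a made-up starting cost of 100, a price of 25, and costs halving each period, `periods_until_profitable(100, 25, 0.5)` tells you how long you would sell at a loss before the curve catches up with your price.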

Anderson was talking about the price drop in computer components and internet connectivity. We've seen a similar drop in the cost of neural networks, the technology behind a lot of ML/AI and all genAI models.

Neural nets' price tags originally put them squarely in FAANG territory, far out of reach of smaller outfits. Hardware prices eventually fell and the technology improved, to the point that anyone with a decent computer could build their own models. (Sort of. For a while the AI crowd had to compete with crypto miners for GPU cards.) That led to pre-training, in which one group does the work to train a base model that others can fine-tune for their particular use case.

You can see today's LLMs as super-sized pre-trained models. They are children of the Costs Pointing to Zero phenomenon, and will be the parents of many more.

Act II: Decomposing the total cost

In discussing the DeepSeek release most people focus on the dollar cost of the model itself. That's important but it's only one element of the total cost of building and operating a model. So when we think about the cost of AI going to zero, it helps to think about other contributing factors to that total.

The five cost elements below hold for predictive-style ML/AI as well as today's generative models:

1/ Invoking third-party models. I use the term "AI as a Service" (AIaaS) for those models that live behind API calls. This includes most genAI offerings. While AIaaS prices aren't quite "zero," they're accessible and on a downward trend. A monthly subscription will blend in with the streaming services on your credit card statement. Or if you're paying a la carte, testing an idea will run the cost of a sandwich.

I expect AIaaS companies will cut prices over time. Then again, it's not clear whether AIaaS providers will do this in light of technology advances and improved economies of scale, or because they're keeping prices artificially low in order to claim market share. We've seen both before: the former, from traditional cloud firms; the latter, from ZIRP-era gig-economy companies. You know, the ones that hiked prices when that sweet sweet VC money ran low.

2/ Training and running self-managed models. The headline on the DeepSeek news was that you could get a genAI model for free and run it on your own hardware. The more subtle, underappreciated point is that they were able to train their model for $5 million – about ten percent of the cost of the big-name LLMs.

Since DeepSeek has published their playbook, other companies can follow suit. Expect a series of well-funded players to create smaller, domain-specific AIaaS offerings. Such models will exhibit improved reliability over their large, generalized counterparts by narrowing the scope of what they might say. (If you've only trained your model on, say, financial data, then it's unlikely someone will convince it to utter racial epithets or the recipe for napalm. Emphasis on "unlikely.") "Reduced risk" will therefore be a key element of their sales pitch.

As that $5 million price tag falls, some companies will skip AIaaS altogether to build their own models in-house. They'll benefit from improved privacy (data and queries will remain inside their walls), company-specific generated artifacts (as the models will be trained on their own data), greater trust in the model (since they'll know exactly what went into the training data), and more clarity on pricing (because they're no longer paying a vendor for each token that goes back and forth across the wire). In-house models will encourage more experimentation, leading to more worthwhile, business-specific use cases. This will trigger a virtuous cycle in which businesses alternate between improving operational matters (deploying models, building safeguards) and even more experimentation.

There's still the question of whether you should bother to train your own LLMs. A generalized model from a major AIaaS provider has a ton of financial resources and industry talent behind it. There's a chance those AIaaS models will outperform and out-protect anything you build yourself. But if the price to train in-house gets low enough, that won't matter. You'll be able to treat custom models as lottery tickets: "if it works, I win; if it fails, I won't miss the money."

(Readers from the quant finance space will recognize a parallel with trading strategies. Once you develop proficiency in researching and implementing models, you can afford to run them for a short while and throw them away when they stop producing revenue.)
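The lottery-ticket framing boils down to simple expected-value arithmetic. The sketch below is illustrative only: the function names are mine, and it treats a custom model as a single all-or-nothing bet, which is a simplifying assumption.

```python
def expected_value(p_success: float, payoff: float, cost: float) -> float:
    """Expected net gain of training a custom model, treated as a one-shot bet."""
    return p_success * payoff - cost


def break_even_probability(payoff: float, cost: float) -> float:
    """Smallest success probability at which the bet stops losing money on average."""
    if payoff <= 0:
        raise ValueError("payoff must be positive")
    return cost / payoff
```

As the training cost falls, `break_even_probability` falls with it. That is the point of the lottery-ticket argument: a cheap enough ticket is worth buying even when the odds of success are slim.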

3/ The damage caused by models behaving badly. (With apologies, and respect, to Emanuel Derman.) AI model performance today ranges from "pretty good" to "laughable." The impact of that track record – the cost of the models being wrong – varies by the use case. Sometimes it's fractions of a penny, as with online ad placement. Sometimes it's your entire business, like when the system places a series of ill-fated orders in rapid succession.

While this cost will never be zero – no model is perfect – progress in three areas will reduce the pain to a manageable level:

R&D: Better training techniques and specialized models will improve overall performance.

AI literacy: We'll get better at matching tasks to what the models can actually do, instead of what we hope they'll do.

Deployment: Stronger safeguards will protect against accidents and bad actors.

That said, this is a touchy place to play the "assume the cost is already zero" game. We don't want to pretend the models already perform well and push them out to the public. (That's the situation we already have today.) But we can plan for that future, and prepare to shift business models when the time is right.

4/ The damage from AI working as advertised. As AI technology gets better, the harm caused by deepfakes gets worse. Clearer regulation – the kind that actually gets enforced – will reduce this cost, but only if we have tools that reliably detect generated content in real time. That's how we stop fraudulent transactions and prevent fake videos from going viral.

We'll get there eventually. But not for a while. I expect this will be the last AI cost to truly approach zero.

5/ AI hype. The simple truth is that vendor pitch teams emphasize what AI might do in the future, but they sell it as though it's already working today. This cost is borne by buyers of overhyped AI solutions (products which were released too soon) and by anyone subject to them (say, people mistakenly tagged by faulty fraud detection tools).

The cost of inflated expectations isn't exactly pointing to zero, but the conditions for the inevitable market correction – under which the cost will fall dramatically – loom large. The correction becomes more likely every day that AI's capabilities don't match up with providers' claims.

Such a correction will improve the lives of AI buyers by wiping out a lot of the snake oil. It will also wipe out the revenues of AI bullshitters, which is fine by me.

Act III: Adding up zeroes

I noted before that free, open-source tools gave rise to cloud computing. Falling costs for storage and compute capacity encouraged companies to collect and analyze more data, contributing to the birth of data science. Combined, all of that paved the way for genAI. I wonder, what will be the long-term impact of (as-good-as-)zero-cost AI?

We still have time to figure that out. While these costs are all staring at zero, some are moving faster than others. And some will stall in light of increased hype and lax regulation. But eventually, the falling costs of AI will put models in the hands of more people, leading to more exploration and more use cases. These people will create new interfaces beyond today's API calls and text input boxes, which will open up new ways for people to use these tools, pushing AI into new domains. I can't wait.

Act IV: Zero isn't free

Some AI costs are simply the product of applying technology to a business. It's unlikely these will ever go to zero. Some will actually increase as AI finds its way into more corners of the business.

Take, for example, implementation costs. Your CTO and CISO will have a lot to say about building out the infrastructure to train and host those models in-house. Then you have the cost to wire those AI models into your existing software applications and workflows. You can trick yourself into thinking that this work is essentially free because salaried employees will do it. But there is no free lunch. While the price for employees' time won't appear as new entries on your balance sheet, other initiatives will suffer.

Then there are the adjustments to the business itself. Once those models are wired into your technology stack, they'll impact operations and processes. You've already considered how automation in one department will shuffle your org chart and require some team members to retrain. But how will that automation impact downstream processes? Will you also have to look into automating the affected departments?

Lastly, legal and PR matters will exist so long as you're in business. As AI becomes more accessible and people uncover new use cases, they'll undoubtedly run afoul of existing laws and social norms. They'll also inspire new regulations, and new social boundaries. It would be wise to keep an eye on all of this, which is another cost element in your total AI spend.

In other news …

The AI companion bot's latest line of defense: a claim that curbing unsafe output would amount to censorship. I don't think this one will fly, but hey, someone's trying it anyway. (MIT Technology Review)

At some point I'll get around to that writeup on AI agents. In the meantime, here's another reminder that agents are currently more talk than action. (WSJ)

We know, anecdotally, that AI chatbots talk a lot of nonsense. A BBC research team has put numbers behind that idea. (BBC)

A look at how genAI has changed the landscape of student cheating. (Le Monde 🇫🇷)

GenAI gets a lot of attention these days, but let's not forget about an older data sin: the way websites (cough Facebook cough) stalk you from site to site. (The Guardian)