#049 - Looking for a grenade in a haystack

The meltdown of auto parts company First Brands may tell us about lurking problems in the genAI space.

You're reading Complex Machinery, a newsletter about risk, AI, and related topics. (You can also subscribe to get this newsletter in your inbox.)

Castles in the sand

Unless you keep up with finance news, you may have missed the growing concern over auto parts conglomerate First Brands Group. The problem? The company's balance sheet appears to have been a sandcastle, and the tide's just come in. The surprise bankruptcy could leave lenders on the hook for billions of dollars.

(Before you say it: no, you're thinking of Tricolor, which is a different car-related entity that has come under fire for alleged accounting fictions.)

An auto company meltdown may seem to stretch Complex Machinery's charter of "risk, AI, and related topics," but it's very much a "risk" story and a warning for "AI." First Brands might serve as a parallel to the genAI wave. Perhaps even an omen.

Which genAI companies are poised to leave creditors high and dry? That remains to be seen. But we can work through some ideas.

Let's start with the well-known LLM providers. They've long been in the news due to lawsuits over alleged copyright infringement and alleged connections to mental health incidents. Such lawsuits could damage a company, for sure, and position it for an unexpected wind-down.

Furthermore, some genAI companies acknowledge that their field is a bubble. They even dare to call it a "good" bubble. A collapse could be like First Brands several times over.

More recently, OpenAI has made headlines over its business model. That includes the CFO first hinting at a government backstop for the company's activities, followed by a quick about-face to say that they did not really mean "backstop" despite having used that very term. Plus there's the drag OpenAI has had on tech stocks. A WSJ piece notes that the real money is in selling services to the big AI companies, which are "losing money as fast as they can raise it, and plan to keep on doing so for years."

("Years" might be a stretch, but I get the point.)

It's the old picks-and-shovels business model, just on a larger scale. This time the mark, er, customer is ten times as self-assured as any gold rush hopeful.

The theory of empty buildings

That covers the well-known companies. But remember that First Brands is only making headlines because of its meltdown – eight weeks ago it was relatively unknown outside of the lending space. It's entirely possible that a big genAI sandcastle will be a similar Wait, Who?? situation. Perhaps one lurking in the datacenter-construction space.

A mismatch between datacenter supply and demand could trap two flavors of business: the kind that's fudging its numbers and praying that it all holds together, and the kind that is honest but will get overwhelmed by a system shock. Or, better put: the kind that is aware of the impending danger (because it's the face in the mirror) and the kind that is not (because it's doing business with the first group).

That leads to questions. A lot of questions. The first is whether all of these datacenters will turn a profit. That, in turn, leads one to ask whether their customers – large genAI companies – can make good on their lofty promises. Then there's the question of whether the datacenters will be up and running in time for anyone who might be interested in using them. You see, in some areas there's a two- or three-year wait for the local power utility to connect these empty structures to electricity.

That's right: some of these buildings are ghost towns. Which means they're a drag on someone's balance sheet.

The idea of building out datacenters before they're able to operate really captures the spirit of genAI's speculative fervor. It'd be comical if it weren't so sad. Even sadder is to see genAI hopefuls using genAI tools to get nuclear power for genAI datacenters.

These datacenter investments aren't exactly chump change, either. Per that article about the three-year wait:

Blue Owl Capital announced more than $50 billion of investment in data centers in September and October, including $30 billion for a Meta Platforms Inc. in Louisiana, and more than $20 billion with Oracle Corp. in New Mexico. The investment firm has 1,000 people at Stack to design, build and operate data centers, co-Chief Executive Officer Marc Lipschultz said on an Oct. 30 call with investors.

Follow the money

Investor money movements may also serve as a warning sign. SoftBank recently sold off more than five billion dollars of Nvidia shares in order to pour that money into OpenAI.

Before you interpret this as a warning against the former and green light for the latter, remember that SoftBank has a … special talent for spotting winners: it has invested in such illustrious companies as WeWork and Greensill Capital. (And per Duncan Mavin's excellent book Pyramid of Lies, even WeWork's Adam Neumann smelled something amiss with Greensill. That tells you a lot.)

Actually, no. Investments won't tell you anything just yet. We're still neck-deep in Just Throw Money At It territory.

The nose knows

While I can't tell you which genAI companies are economic time bombs, I can assure you that each one tells a good story. It's how they entice investors and buyers. Jeff Ryan (founder of QuantKiosk and fellow die-hard realist follow-the-numbers guy) summed up the genAI investment scramble better than I ever could:

It is shocking how easy it is to fool people.

All of the people. All of the time.

I'm sure First Brands had a good story, too. So good that lenders, including the folks at Jefferies, claim that there's no way they could have seen any trouble coming.

Hmm.

The thing with stories is that you don't have to accept them as-is. You can ask follow-up questions.

I'm not saying that "ask questions" will definitely uncover fraud. But it seems that "ask questions, then ask again when you get a weak answer" would be one hell of a start. Do you sense something is off? Keep digging. That's how you find out.

Some groups avoided exposure to First Brands by doing just that. While I commend them on a job well done, I'll also note that they did not have to go very far. The steps described in this Financial Times write-up fall under first-level due diligence. Like, say, a little game of Hey Can We See The Merch You're Claiming As Collateral? No? OK, Then We're Out.

The same holds for genAI. There are plenty of stories out there. Some are very far from reality, held aloft by a confident tone of voice and the sentiments of an extended bull market. If we don't ask enough of the right questions, we'll only get the answers after it's too late. See: the people who never asked "hey why do you say that home values can only go up?" in 2006 and 2007.

When it comes to a bull market, your best line of defense is a keen sense of smell.

Lucky number seven

Seven lawsuits landed on OpenAI's doorstep last week. (CNN, Le Monde 🇫🇷, AP)

Those seven join a growing list of lawsuits against genAI companies alleging that their chatbots played a role in some disturbing mental health episodes – including suicides.

I won't claim that these products are responsible. That's for the courts to decide.

But I will point out that if someone keeps showing up at crime scenes, we'd have a right to ask them what's the deal.

Counting calories

To end on a lighter note…

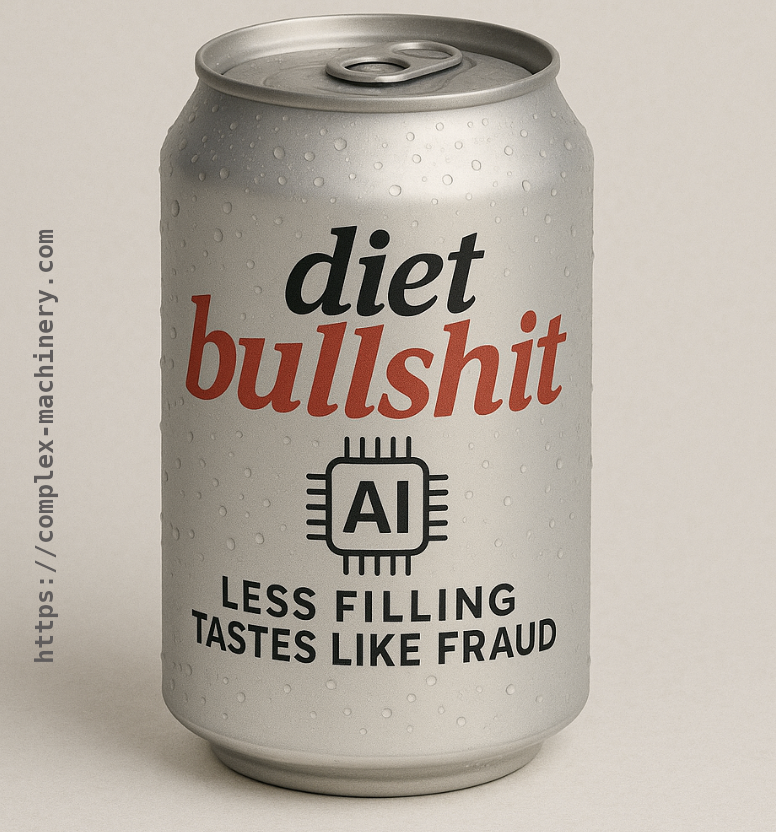

In the last newsletter I pointed out that crypto is bullshit and that genAI, its more socially-acceptable sibling, is "diet bullshit" by comparison.

In response, a reader sent this image my way:

(And yes, they generated it with AI. Image shared with permission, though the creator – "prompter?" – asks to remain anonymous.)

Say what you will about crypto, but I respect that the diehards had the self-awareness to mock themselves every time they lost out. They smiled through the pain and kept going.

I don't expect the genAI crowd will be as brave.

Now if you'll excuse me, I have to dust off some old crypto memes…

Recommended reading

I've just released my latest book, Twin Wolves: Balancing risk and reward to make the most of AI.

This is a tight, executive-level read on how to approach AI – both ML/AI and genAI – in your company. Think of it as having a conversation with me about your AI options. Without, y'know, having to actually talk with me.

It's available in DRM-free PDF and EPUB formats, and for the time being it's offered under a pay-what-you-want(-with-a-minimum-price) model.

Feel free to spread the word on Bluesky or LinkedIn, if you hang out there.

In other news …

- I recently came across a 2009 paper on lessons from the 2008 mortgage meltdown, aka the Great Financial Crisis (GFC). No clue why I've been digging into the GFC again as I read about AI news. No reason whatsoever. (Federal Reserve Bank of New York)

- It seems like attorneys really dig using genAI bots in their work. Which is fine. But they aren't so good at, y'know, checking the bots' work after the fact. (New York Times)

- Aspiring legal professionals also use genAI bots. The results are about the same. Just ask Kim Kardashian. (Gizmodo)

- Still, Thomson Reuters wants to provide genAI tools to attorneys. (Business Insider)

- Humans are, once again, the weakest link. That daring Louvre heist? At least one element involved a weak password – "Louvre" – on a key security system. (ABC News)

- A look into the physics-defying Saudi Arabia's "Neom" project, which originally included plans for an office building suspended like a chandelier between two structures. If you think this has no relation to big genAI projects, please think again. (FT)

- Microsoft built a synthetic marketplace in which to test genAI agents. That exercise showed precisely why you want to test your genAI agents in a controlled environment. (TechCrunch, The Register)

- Meta compares its controversial new AI glasses to iPhones. The folks at 404 Media explain why the devices are completely different. (404 Media)

- Today's episode of Adding AI To A Product Doesn't Make It Better, starring Google's Nest Cameras. (New York Times/Wirecutter)

- Ryanair is doing away with paper boarding cards. (Der Spiegel 🇩🇪)

- Remember the old Apple slogan, "there's an app for that"? We need to update that to "there's a chat(bot) for that." Because we have gone so far that we now have chatbot Jesus. (NBC Philadelphia)

- Jeff Bezos launches a new AI company. Because, I guess, why not? (New York Times)

- Thoughts on AI-generated music, including a recent country-charts hit. (Le Monde 🇫🇷, Financial Times)

- Anthropic forges partnership with Sweden's IFS for "industrial AI." (Les Echos 🇫🇷, The Observer)