#053 - Amateur hour

The genAI hype wave has become a multi-trillion-dollar open mic night.

You're reading Complex Machinery, a newsletter about risk, AI, and related topics. (You can also subscribe to get this newsletter in your inbox.)

Risk redux

In 2022 I wrote a short piece called "The Top Sources of Risk Facing the AI Sector." This was a time when "AI" meant "ML/AI," several months before generative AI claimed the acronym as its own.

I'll spare you the full read. The gist was that AI poses the greatest risks:

Where it's not providing value – you're paying for it, but it's not giving anything back

Where it's not being planned or managed well – mismanagement opens the door to all kinds of problems

Where it's oversold – in this case, you're using it in a space where it won't ever work

Where it could provide value but it's not – missed opportunity means missed revenue

I'd been meaning to update that list for our current wave of genAI. After reviewing the article, almost four years on, I'll note:

1/ Most of what I wrote about ML/AI applies just as well to genAI/LLMs. This isn't too surprising. Despite what the hype machine wants us to believe, the current technology isn't much of a departure from its predecessors. It's larger, yes. And more popular, sure. But I often remind people that genAI is pretty much an ML classifier running in reverse. That leaves a wide array of possible use cases, but also defines some boundaries.

2/ Some of those risk exposures have even grown. This is mostly due to genAI's popularity, which in turn stems from the hype machine working in overdrive.

Combined, items 1 and 2 almost led me to skip the rewrite as there wasn’t much to add. But then I realized that there are indeed new downside exposures:

3/ Related to item 2, the genAI hype has extended its reach into our business and financial systems. If genAI were delivering as promised, this would be fine. But it's not. When you consider the amount of money pouring into the sector, paired with the increasingly low probability that the sector delivers, the mountains of debt – today we have financial debt, in addition to the social and technical debt I wrote about in 2024 – are rather worrying. Even worse is the way genAI companies are getting creative with the numbers. One reason to hide (sorry, shuffle) massive amounts of debt is to … make it easier to take on more debt, because your balance sheet looks healthier.

When this unwinds, it will be a rather grotesque game of musical chairs.

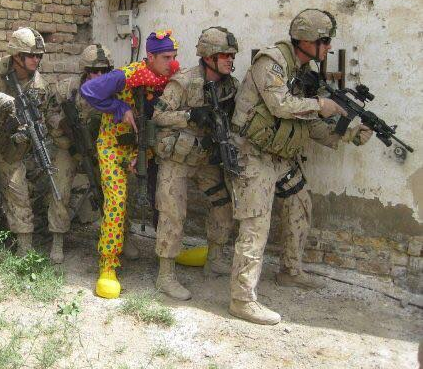

4/ Generative AI's reach has attracted quite a bit of amateurism to the field. Amateurs and money do not mix well. Or, better put: they mix a little too well. Right as I released Complex Machinery #051 at year-end, I saw a Bloomberg article on the datacenter gold rush. It pointed out that a number of new players in the space had big dreams but zero experience. I joked to myself that diving in to make some easy money was an emerging-tech staple. GenAI would be no exception.

And that's precisely the problem.

Hiding in the layer cake

The AI-eager executives are hungry for information and implementation. That's led to an industry of newly minted AI "experts" to guide them, newly minted practitioners to build the products, and now newly minted datacenter providers to host it all. The genAI space has amateurs at every level.

This isn't to say that everyone here is a cosplaying amateur. For one, that's simply not true. There are plenty of experienced professionals in this field. Including the author of this fine newsletter.

Two, if everyone involved were an amateur, that would be easy to handle. Just stay the hell away from genAI and all will be fine.

The problem is that the amateurs aren't clearly visible. And that has allowed the play-actors to slip into various levels of genAI's layer cake:

For the executive in search of guidance, the seasoned expert and the loudmouth with a podcast look the same. (In some cases, the amateur looks better than the expert, because they're telling the buyers what they want to hear. Even when it conflicts with reality.)

The product that actually works looks the same as the product that doesn't. At least, for now.

Even worse, let's say an executive works with a genuine expert and develops a sound, risk-managed approach to their company's AI transformation. A few levels down, their business may ultimately run on a datacenter managed by Andy Amateur.

On that last point, you'll tell me that every provider suffers an outage now and then. Even big players. This is true! Every time AWS region us-east-1 falls over, it takes half of your apps with it. But keep in mind that AWS is run and staffed by infrastructure experts. Their preventative maintenance staves off all kinds of problems. And when things inevitably go wrong, they have the instrumentation and expertise to resolve them in short order.

What do amateur datacenter operators have during a disaster? Mostly, hand-waving. Hand-waving and excuses. And their excuses won't placate your angry customers.

There are also concerns for the genAI investor. They're already taking a chance that there will be demand for the services built on this technology. Given all of the debt that is driving the datacenter deals, they're also saddled with the risk that their investments won’t have a place to run. (Like, say, the datacenters that have been built but are likely two years away from getting power.)

I was about to liken the genAI scene to an open mic night. You might get a professional comedian testing their new material. You might just as well get a complete novice. You might even get an agitator who is using their stage time as a platform for hateful rhetoric. You won't know till it's too late.

But that felt like too frivolous an analogy. This is real money, serving real businesses, which in turn serve real customers. The tiers in this layer cake are akin to tranches in a debt instrument. That makes the genAI scene more like a 2007-era collateralized debt obligation (CDO), which was in turn a twisted game of Where's Waldo: Which mortgages are clean? And which are about to fail? Only this time, everything is packaged together into one bundle called "AI companies," and even the allegedly "safe" bets are a step shy of collapse.

Getting a good vibe from this one

Vibe coding lives at a strange crossroads. In the hands of an experienced software developer, LLM-generated code can boost productivity by a noticeable margin. In the hands of an entry-level developer or hobbyist, it can create apps that barely work or don't work at all.

Most people would chalk this up to the pro being able to inspect the LLM's output and correct problems in the code. That certainly helps! But a typical software project involves so much more than code. There are test suites and best practices and different stages of migration. There are product people and QA teams and infrastructure specialists, the combined efforts of which create a system of checks and balances to keep everything on the rails.

One under-appreciated danger of vibe coding, then, is that someone who does not embody these best practices can publish an app that only looks like it's fit for purpose. That's fine for a hobby app that has an audience of one. It becomes a problem when that app serves a business with real customers.

To drive home this point about best practices and standards, we can wind the clocks back to a time before vibe coding. Let's say 2014, when the crypto exchange Mt. Gox is in full swing:

Mt. Gox, he says, didn't use any type of version control software -- a standard tool in any professional software development environment. This meant that any coder could accidentally overwrite a colleague's code if they happened to be working on the same file. According to this developer, the world's largest bitcoin exchange had only recently introduced a test environment, meaning that, previously, untested software changes were pushed out to the exchange's customers -- not the kind of thing you'd see on a professionally run financial services website. And, he says, there was only one person who could approve changes to the site's source code: Mark Karpeles. That meant that some bug fixes -- even security fixes -- could languish for weeks, waiting for Karpeles to get to the code. "The source code was a complete mess," says one insider.

The cost of this amateur approach to software development? Just a theft of $460 million. Nothing to worry about.

What will be the vibe-coded version of this tale?

The opposite of hedging

Moving on from amateur hour, we have the full-on shit-show.

If you've been on a self-imposed news blackout, this would be a great time to close this newsletter and go about your day. But if you insist on reading:

The X Formerly Known As Twitter has turned into a factory of non-consensual deepfake content. As I noted in In Other News:

Grok, the Twitter-based genAI chatbot that was designed to be anti-woke and extremely permissive, has – shocking – been churning out deepfake images that sexualize minors. Despite wall-to-wall news coverage, widespread public outcry, and potential legal action from multiple governments, parent company xAI has yet to issue any substantive explanation or correction. (Le Monde 🇫🇷, Ars Technica, Politico, CNBC, The Atlantic, Les Echos 🇫🇷, The Times UK, The Guardian, Washington Post, and many more ...)

(In light of the Grok news, the Financial Times Alphaville team has provided a rundown of the Twitter/X org chart.)

That was last week.

Grok has since been blocked in Indonesia and Malaysia, and other world governments have considered taking similar action.

Hmm.

A wise risk manager once told me: The opposite of hedging? That's when you double down.

No clue why I am thinking of that right now. But, completely unrelated, Twitter has still not fixed the problem of its mass-scale CSAM machine. In fact, like a chainsaw-wielding maniac let loose in an office building, Twitter reportedly unveiled a new policy in which the disturbing content would live behind a paywall. I mean, yes, I wager the criminally perverted would make for a good source of income (until they are apprehended by law enforcement), but that still doesn't make it a good idea.

And I emphasize "reportedly" there, as I've also seen claims that the paywall was simply a hallucination from Grok.

The fact that both stories sound equally plausible tells you everything you need to know about the state of Twitter.

Either way, Grok's host is sticking to its guns and claiming that banning Twitter would be an attack on free speech.

As an amateur linguist I realize that words change meaning over time. But I must have missed the memo on "free speech" shifting to "on-demand creation of disturbing and sometimes illegal sexual material."

Can't wait to see what they do with "freedom."

Mixed messages

I’ll close out with an episode of The Left Hand Disavows All Knowledge Of What The Right Hand Has Been Up To:

OpenAI is working to settle lawsuits that accuse its chatbots of driving teens to suicide.

In that same breath, OpenAI is launching ChatGPT Health and encouraging people to connect their medical records to the system.

But what about … y'know … those lawsuits? The alleged ties to extreme mental health incidents? Would that not shake our confidence in this new health-related offering?

Not to worry. OpenAI assures us that the system is "not intended for diagnosis or treatment."

And also, "HIPAA doesn’t apply in this setting."

Well, I certainly find that reassuring. Don't you?

In other news …

Tim O’Reilly's take on where the AI-driven economy could and should go. (O'Reilly Radar)

A group of people who claim to work at big AI firms are building tools to poison AI data collection. (The Register)

Remember Uber's "greyball" tool? Meta saw that idea and said "hold my beer," allegedly creating an entire playbook for dodging government regulators around the world. (Reuters)

If you don't recognize the name Yann LeCun, just know that he's 1/ well-known in AI circles and 2/ recently left his role at Meta. In this interview with the FT, he pulls no punches in describing life at Meta after the company built a separate AI "superintelligence" unit. (FT)

Grocery chain Wegmans has decided to get into the data collection game at its NYC stores. As in, collecting customers' biometric data. And the wording on its signs does little to calm privacy concerns. (Gothamist)

A painful example of the knock-on effects of bad data: incorrect, outdated provider lists lead patients to delay treatment and sometimes shell out for pricier private care. (ProPublica)

As an attorney, have you ever thought about submitting official court docs that were generated by an LLM? Think again. (NYCourts.gov)

Since genAI chatbots tend to agree with whatever the end-user says, they're terrible tools for fact-finding. That can lead to wider social problems as people get a reinforced take on their existing world-view. (Le Monde 🇫🇷)

This year's CES was all about humanoid robots. (Les Echos 🇫🇷)

California, once again, leads the pack in US data privacy: residents can mass-delete their personal data from data brokers. (TechCrunch, Washington Post)

Microsoft CEO Satya Nadella hopes people will stop talking about genAI "slop." No details on whether genAI will actually stop creating the slop that people talk about. (Windows Central)

(For additional recent news, and with a slightly broader scope, I encourage you to check out my other newsletter. It's a weekly, curated drop of what I've been reading.)